NSA “touches” more of Internet than Google

In deep packet inspection, it's not the size of the data that matters.

Equinix's co-location facility in San Jose,

California, one of the network exchange sites likely tapped by the

NSA's "one-side foreign" surveillance.

Photo: Peter McCollough/Wired.com

According to figures published by a major tech provider, the Internet carries 1,826 Petabytes of information per day. In its foreign intelligence mission, NSA touches about 1.6 percent of that. However, of the 1.6 percent of the data, only 0.025 percent is actually selected for review. The net effect is that NSA analysts look at 0.00004 percent of the world's traffic in conducting their mission—that's less than one part in a million. Put another way, if a standard basketball court represented the global communications environment, NSA's total collection would be represented by an area smaller than a dime on that basketball court.The numbers are no real surprise—we've already discussed how the laws of physics would make it impossible for the NSA to capture everything, or even a significant portion of everything, that passes over the Internet. But they're also misleading. In the world of deep packet inspection, verbs like "touch," "select," "collect," and "look at" don't begin to adequately describe what is going on or what information is extracted from traffic in the process. Considering all that's within what flows across the Internet, 1.6 percent could hold a significant portion of the metadata describing person-to-person communications.

How much is 1.6 percent?

The dime on the basketball court, as the NSA describes it, is still 29.21 petabytes of data a day. That means the NSA is "touching" more data than Google processes every day (a mere 20 petabytes).While 29.21 petabytes is a fraction of the overall traffic on the Internet, it is the equivalent of the traffic that passes through several major Internet exchanges each day. It amounts roughly to 2.77 terabits per second—more than the average throughput of the Equinix exchange network, the CoreSite Any2 Exchange, New York International Internet Exchange (NYIIX), and Seattle Internet Exchange (SIX) combined. In other words, the 1.6 percent of the total of Internet traffic "touched" by the NSA could easily contain much of the traffic passing through the US' core networks. It can certainly include all the traffic inbound from and outbound to other nations.

Those exchanges are likely the primary targets of the NSA's Special Source Operations "one-end foreign" (1EF) network tap operations. The remaining sources are overseas taps, including "FORNSAT" satellite communications intercepts and data shared by friendly foreign governments' own network surveillance—such as Germany's foreign intelligence agency, the Bundesnachrichtendienst (BND), as detailed in a report published today by Der Spiegel. There are also covert sites set up by the NSA's Special Collections Service, likely including targeted taps of networks in even "friendly" countries.

The NSA has approximately 150 XKeyscore collection points worldwide. To reach 29.21 petabytes per day, XKeyscore sites pull in around 190 terabytes a day. And to keep the three-day "buffer" XKeyscore holds of captured traffic, that would mean the sites have an average of about 600 terabytes of storage—the equivalent of a fairly manageable 150 4-TB drives.

Pick a peck of packets

Regardless how much data flows through the NSA's tap points, all of it is getting checked. While the NSA may "touch" only 29.21 petabytes of data a day, it runs its digital fingers through everything that flows through the tap points to do so.The NSA's XKeyscore uses packet analyzers, the hardware plugged into the network that diverted Internet data is routed down, to look at the contents of network traffic as it passes by. The packet analyzers use a set of rules to check each packet they "see" as it is read by the analyzers' software into memory.

Packets that don't meet any of the rules that have been configured are sent along unmolested. In a "normal" network filtering situation, these packets would then be forwarded down the wire to their recipient, but in the NSA's case the packets are just clones of the packets that have already passed onto their intended destination. They are just sent to /dev/null—flushed away forever.

Packets that match one or more of the rules get routed to processing servers for further analysis. Those rules can be very broad—"grab everything with an IP address in its header that is outside the United States," for example—or they can look for very specific patterns within packets, such as those of VPN and website log-ins, Skype and VoIP traffic, or e-mails with attachments. In some cases, a filter may capture only the initial three-part TCP handshake of a connection between two systems, or it may only look for specific patterns of Web requests from clients. The rules could also include "if-then-else" logic: "If this is a packet that is part of an e-mail message I saw going by earlier and it includes attachment data, then grab it."

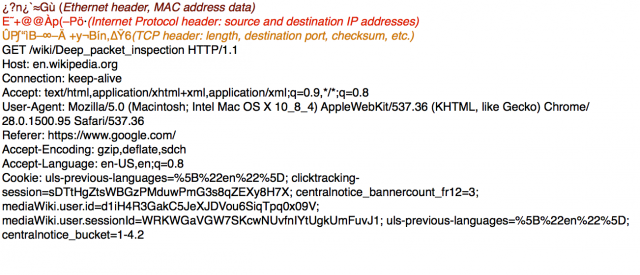

Enlarge /

A single IP packet, snatched from the stream, can tell a lot. This HTTP

request tells anyone who reads it where I am, what I'm looking for,

where on the Web I'm coming from, and a collection of cookies that can

be used to track me later.

Sean Gallagher

How I learned to stop worrying and love packet capture

There's a lot of chaff that gets ignored by XKeyscore sites. They ignore Web server responses, DNS traffic, content distribution network traffic, and the other administrivia and overhead that is involved in making the Internet work. At least in theory, they largely ignore domestic Internet traffic that doesn't transit outside the US. Though depending on where you live in the US and where you're connecting to, a significant portion of your network traffic may pass through Canada or follow other paths that could expose you to surveillance.And, at least up until this point, all of the processing is being done without human intervention or human eyeballs being involved. The data is kept in buffer for three days and heavily indexed for search, with metadata extracted and held for a month. Still, unless the content matches a cross-referenced search like "all Excel documents e-mailed in Iraq," it will probably avoid human eyes.

XKeyscore is also integrated into Marina, the NSA's phone call metadata database. That allows for the trolling of Internet traffic for phone numbers of interest and for quick searches of raw data in XKeyscore's cache by analysts.

After XKeyscore's processing servers churn through the raw captured data, they forward extracted information such as metadata, attachments, and other content related to cases assigned a National Intelligence identifier over the wire to PINWALE, the NSA's in-house "big data" analysis platform for Internet intercepts (believed to be based on the Accumulo data analysis engine). This has to be done with a good deal of care not to overwhelm the NSA's private network backhaul to its data centers and reduce the performance of XKeyscore searches. So by the time the data has gone through this many levels of refinement, the NSA says that only 0.025 percent of the data "touched" by its systems each day is "selected for review" and sent back.

That's 7.47 terabytes a day of connection metadata, cyber-attack targeting data and virtual private network intercepts, e-mail attachments, and instant messages. Really, that's nothing, right? The 2.66 petabytes a year of analytics that get rolled up in front of the eyeballs of analysts at NSA, in the DOD, and various other intelligence and law enforcement agencies is but a pittance.

Of course, that doesn't cover the fact that the NSA is, in effect, collecting 10.411 exabytes of short-term searchable content in XKeyscore. They extract information from it that is much more valuable (and potentially more intrusive) than the raw data.

Promoted Comments